Now that summer is over, we’re back to our semi-regular updates from the tweasel project, where we are building a web app for fighting privacy violations in mobile apps. In this update, we are excited to announce that we have launched our open request database, where anyone can access and query the traffic data that we have collected from thousands of Android and iOS apps. We have also been busy documenting the trackers that we encounter in our data, using various sources and methods to identify the types of personal data that they transmit. Additionally, we have started doing legal research into how to establish tracker IDs as personal data and to prepare for writing our complaint templates.

TrackHAR and documentation


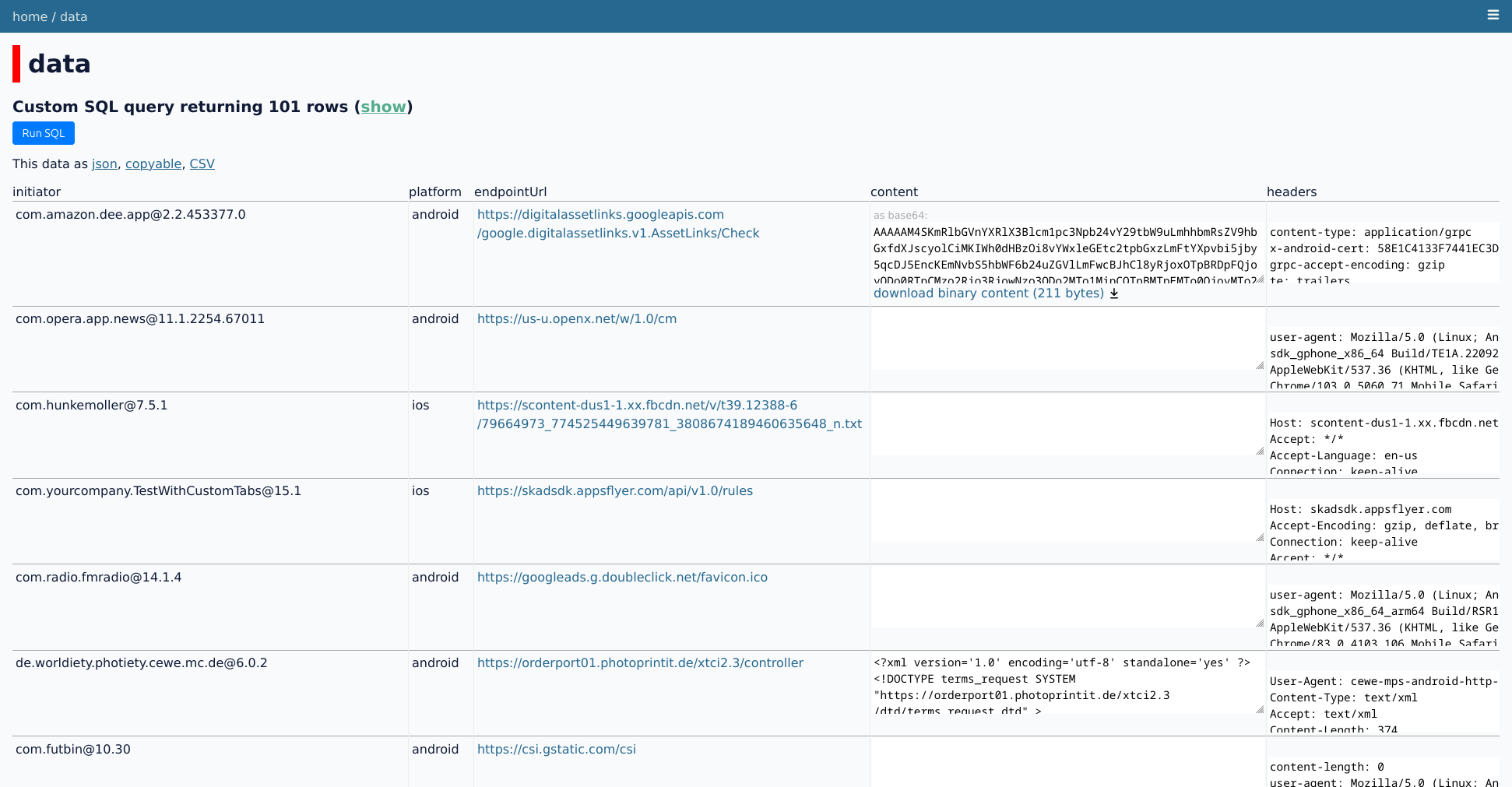

We have launched our open request database at data.tweasel.org. We regularly run traffic analyses on thousands of Android and iOS apps. As we want to enable as many people as possible to look into the inner workings of trackers, we are finally publishing our datasets for other researchers, activists, journalists, and anyone else who is interested in understanding tracking. There are already 250k requests from between January 2021 and July 2023, with more to come in the future.

To publish the data, we are using the phenomenal Datasette. We have installed a few plugins to provide even more features, and have written a custom plugin to enhance the display of requests in table views (source code, more details).

This setup allows anyone to run arbitrary SQL queries (enhanced with additional features by the plugins) against the data. We hope that this will enable others to do investigations that we couldn’t even think of. To give just a few (pretty basic) examples of the kinds of queries that can be run:
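
As a rough sketch of how such a query could be sent programmatically: Datasette exposes a JSON API where a database can be queried via `/<database>.json?sql=…`, with named SQL parameters passed as additional query-string values. The database name `data`, the table `requests` and the column `host` below are assumptions made for illustration and may not match the actual schema.

```python
from urllib.parse import urlencode

BASE_URL = "https://data.tweasel.org"  # instance named in this post
DATABASE = "data"  # assumption: the actual database name may differ


def build_query_url(sql: str, **params: str) -> str:
    """Build a Datasette JSON API URL for a read-only SQL query.

    Named SQL parameters (":limit" etc.) are passed as extra query-string values.
    """
    query = {"sql": sql, "_shape": "array", **params}
    return f"{BASE_URL}/{DATABASE}.json?{urlencode(query)}"


# Hypothetical table and column names, for illustration only.
TOP_HOSTS_SQL = (
    "select host, count(*) as n from requests "
    "group by host order by n desc limit :limit"
)

url = build_query_url(TOP_HOSTS_SQL, limit="10")
print(url)
```

The resulting URL can be opened in a browser or fetched with any HTTP client, for example `urllib.request.urlopen(url)` followed by `json.load`. With `_shape=array`, Datasette returns the rows as a plain JSON array of objects.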


I’ve been busy backing up our adapters with proper documentation on the reasoning behind why we think properties contain certain data types. In the first prerelease of TrackHAR, we have called most associations “obvious” but we want to do better than that. This is especially important for the complaints later on. It is a very labor-intensive process but this is also one of the main benefits of the adapter approach: We can provide verifiable evidence for each bit of data that we claim was transmitted. In fact, I have found that a surprising number of the trackers (around half) actually have public documentation on these properties that we can reference. Obviously, the companies themselves admitting to which types of data they collect is the best evidence we can get.

But sometimes, such documentation is not available. We have a hierarchy of possible justifications that we go through in those cases: SDK source code, obvious property names, observed values match known device parameters, obvious observed values, experiments.

So far, I have produced documentation for the following trackers/adapters, adding missing properties and adapters along the way (though none of them are merged or released yet): Adjust (PR), branch.io v1 (PR), Bugsnag, Facebook (PR), ioam.de (PR), Microsoft App Center (PR), OneSignal (PR), singular.net (PR)


When I started the adapter work, we only had a very basic idea of how we actually wanted to document all this but I decided to just get my feet wet and gain a better understanding of the problem as I worked on it. That sparked some very in-depth discussions. To summarize the main conclusions:

- Where should the documentation be stored (in pads hosted somewhere, in GitHub issues, in Markdown files in some repo, …)?

  We decided to keep the documentation as Markdown files in the TrackHAR repo, such that each page gets its own folder and each property its own file. We glue the sections together using Hugo in the `tracker-wiki` repo (which Lorenz has already implemented). This way, we can easily reference the reasoning for each property and generate links to the final documentation.

- How should we archive the external links that we use as sources for our documentation?

  This one is particularly tricky. We ideally want to use an established and trusted service like the Wayback Machine but that has issues with lots of the pages we tried (sites blocking the archiving resulting in broken snapshots, dynamic content not being captured properly). The only other public alternative is archive.today, which is heavily gated behind reCAPTCHA and cannot be automated. Meanwhile, the available self-hosted solutions all have their own problems, and we wouldn’t be able to host them publicly anyway due to copyright issues. In the end, we decided to create our own solution, keeping that as simple as possible:

  - We store the original links in the `reasoning` field of the adapters, and have a file watcher that tries to archive them using the Save Page Now API of archive.org. Errors are recorded in a local file so we can retry using an exponential backoff strategy. Lorenz has mostly implemented this already. The archived links are stored in a CSV file in the TrackHAR repo and have redirects set up in a subfolder on `trackers.tweasel.org`.
  - If automatic archiving fails, we have to manually create a snapshot on a public archive, likely using archive.today, and add that to the CSV.
  - We will manually take screenshots and PDFs of the pages, and upload them to a Nextcloud folder or similar. These can unfortunately not be hosted publicly.
  - We’ll create a linting script that warns us on commit if some of the links have not been archived.

- What do we even want to document, how in-depth do we want to go? Do we always need to reference official documentation if available?

  We decided that we will only document things related to the properties in our adapters to avoid scope creep, even though there are a lot of (disturbingly) interesting admissions on tracker websites. It is fine to use “obvious” as a reasoning if the property name or value is self-explanatory. We do not need to seek out official documentation for every property, but we should update our documentation if we find it later. We also decided that we won’t document properties that are listed in official documentation but that we have not observed in our requests, as we only rely on the data we find.

- How do we document the honey data that we use for our analysis?

  We decided to document the honey data as JSON in the `datasets` table of `data.tweasel.org` and create a nice human-readable view using a plugin.
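
Two pieces of the link archiving workflow described above, the exponential backoff for retries and the lint check for unarchived links, can be sketched roughly as follows. This is only an illustration and not the actual implementation: the CSV column names `original` and `archived`, the base delay and the cap are all assumptions.

```python
import csv
import io


def backoff_delay(attempt: int, base_seconds: float = 60.0,
                  cap_seconds: float = 86400.0) -> float:
    """Delay before retry number `attempt` (0-based): base * 2^attempt, capped."""
    return min(cap_seconds, base_seconds * 2 ** attempt)


def unarchived_links(source_links: list[str], archive_csv: str) -> list[str]:
    """Return the source links that have no archived counterpart in the CSV text."""
    reader = csv.DictReader(io.StringIO(archive_csv))
    archived = {row["original"] for row in reader if row["archived"]}
    return [link for link in source_links if link not in archived]


# Hypothetical CSV layout: one row per original link with its archived snapshot.
ARCHIVE_CSV = (
    "original,archived\n"
    "https://example.org/docs,"
    "https://web.archive.org/web/2023/https://example.org/docs\n"
)

# Links as they might be collected from the adapters' reasoning fields.
links = ["https://example.org/docs", "https://example.org/sdk"]

missing = unarchived_links(links, ARCHIVE_CSV)
for link in missing:
    print(f"warning: not archived yet: {link}")
```

A lint script along these lines could exit with a non-zero status when `missing` is non-empty, so that a commit hook surfaces the warning.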


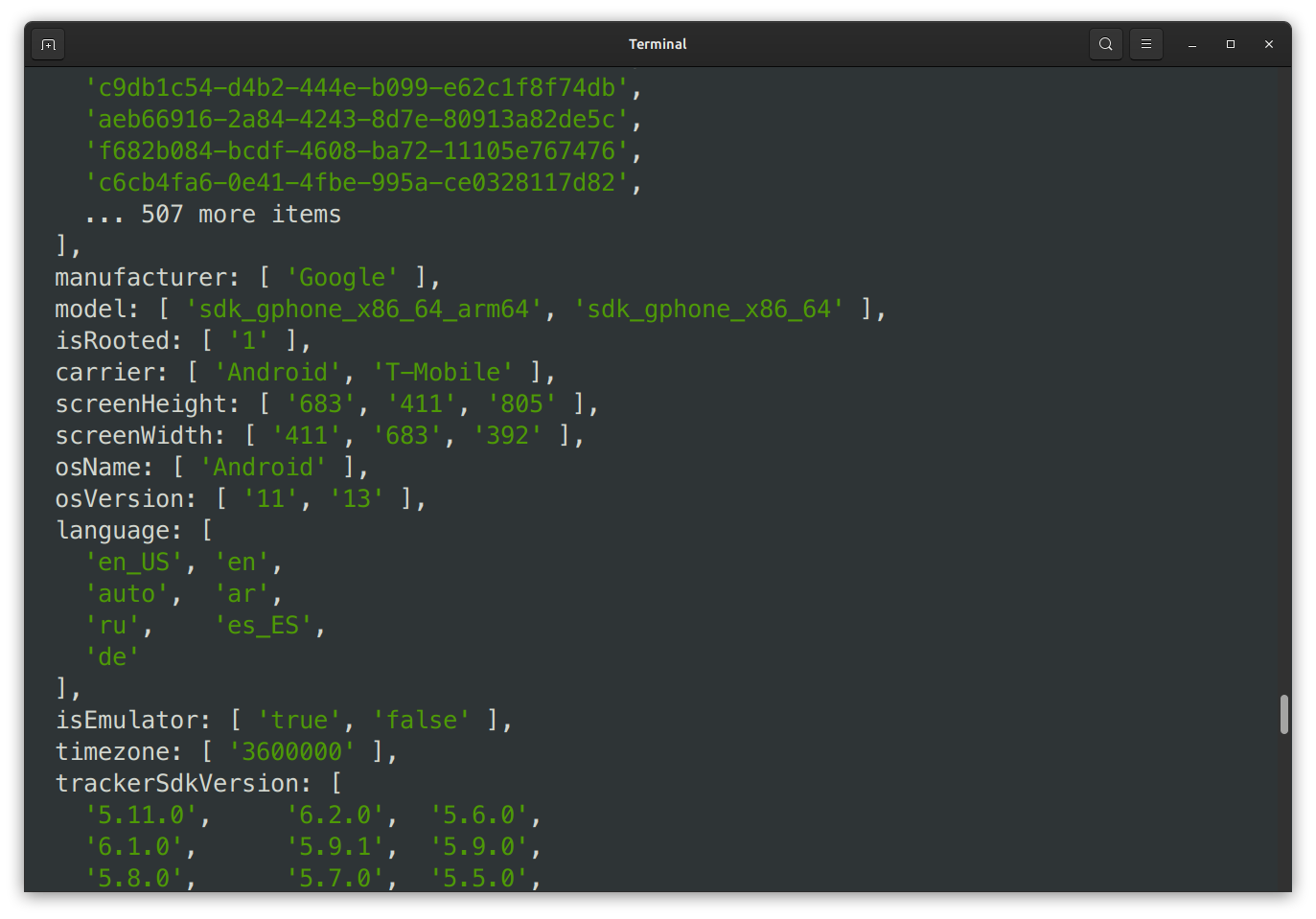

To enable the adapter work, I’ve written a script for debugging adapters that allows you to run an adapter against all matching requests in our request database. And as I said, that database (including its API) is public, so anyone can use this, not just us.

This is quite a powerful tool for ensuring that our adapters are correct—you can quite easily spot transmissions that don’t match your initial expectations and correct the adapter accordingly.


The adapter work has also given rise to quite a few architecture issues and questions for TrackHAR that we’ll have to consider:

- How do we deal with tracker-specific “empty” values? For example, if `idfa: none` is transmitted, we don’t want to identify an IDFA transmission. I’ve proposed `onlyIf` and `notIf` regex filters in `DataPath`.
- How fine-grained do we want to be with our categorisation of proprietary IDs? Currently, they all end up as `otherIdentifiers`, but there are various different types. In the complaints, we’ll have to argue for each one why it is personal data. Does that mean that we need a specific property and justification blurb for each possible ID? Or can we maybe get away with just having more granular ID types (session, device, user, installation, …)?
- How do we represent transmitted events?
- I don’t think we should list the locale under `country` anymore.
- I proposed renaming our current `idfa` property to `advertisingId`, and `idfv` to `developerScopedId` to avoid making them sound iOS-specific.
- We should be more clever in recognizing endpoint URLs, by at least trimming trailing slashes.
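
To make two of these proposals more concrete, here is a minimal sketch of how regex filters for “empty” values and trailing-slash trimming could behave. It is written in Python for illustration only and is not TrackHAR’s actual API: the function names and the `only_if`/`not_if` parameters are hypothetical stand-ins for the proposed `onlyIf` and `notIf` filters.

```python
import re
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def extract_value(value: Optional[str], only_if: Optional[str] = None,
                  not_if: Optional[str] = None) -> Optional[str]:
    """Return `value` unless one of the (hypothetical) regex filters rejects it."""
    if value is None:
        return None
    if only_if is not None and not re.search(only_if, value):
        return None  # value does not look like what we expect
    if not_if is not None and re.search(not_if, value):
        return None  # value is a tracker-specific "empty" placeholder
    return value


def normalize_endpoint(url: str) -> str:
    """Trim trailing slashes from the path so equivalent endpoints compare equal."""
    parts = urlsplit(url)
    return urlunsplit(parts._replace(path=parts.path.rstrip("/")))


# Illustrative placeholder pattern: the literal "none" or an all-zero ID.
EMPTY_ID = r"^(none|0{8}-0{4}-0{4}-0{4}-0{12})$"

print(extract_value("none", not_if=EMPTY_ID))
print(extract_value("6D92078A-8246-4BA4-AE5B-76104861E7DC", not_if=EMPTY_ID))
print(normalize_endpoint("https://example.org/v1/track/"))
```

Filtering at the level of the individual data path keeps the placeholder patterns next to the property they apply to, since different trackers use different “empty” values.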


Finally, I have started investigating Google’s tracking endpoints more thoroughly. Those are mostly not publicly documented and use Protobuf as the transmission format, which doesn’t contain any property names that we could rely on. I’ve made good progress on a new adapter for `firebaselogging-pa.googleapis.com`, which is actually the fourth most common endpoint in our dataset (as you can see for yourself on `data.tweasel.org`). I’ve discovered the actual Protobuf schemas for those scattered around various public Google repositories, which will allow us to definitively identify the transmitted properties.

I’ve also started looking into `app-measurement.com`, but have not gotten too far yet.
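
To illustrate why the schemas matter: on the wire, a Protobuf message only carries field numbers and wire types, not property names. The following is a minimal, schema-less decoder sketch for the standard Protobuf wire format. It is not the adapter code, and it uses the small example message from the Protobuf encoding documentation rather than real tracking traffic.

```python
def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at `pos`; return (value, next position)."""
    result = 0
    shift = 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of the varint
            return result, pos
        shift += 7


def decode_fields(buf: bytes) -> list[tuple[int, int, object]]:
    """Split a message into (field number, wire type, raw value) triples."""
    fields = []
    pos = 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:  # varint
            value, pos = read_varint(buf, pos)
        elif wire_type == 1:  # fixed 64-bit
            value, pos = buf[pos:pos + 8], pos + 8
        elif wire_type == 2:  # length-delimited (string, bytes, nested message)
            length, pos = read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        elif wire_type == 5:  # fixed 32-bit
            value, pos = buf[pos:pos + 4], pos + 4
        else:
            raise ValueError(f"unsupported wire type {wire_type}")
        fields.append((field_number, wire_type, value))
    return fields


# Field 1 = 150 (varint), field 2 = "testing" (length-delimited).
message = bytes.fromhex("089601120774657374696e67")
fields = decode_fields(message)
print(fields)
```

Without a schema, all we learn is that field 1 holds the number 150 and field 2 holds seven bytes. Only the schema tells us what those fields are called and whether a length-delimited value is a string or a nested message.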

Complaints


I have started doing legal research, looking into relevant complaints, court submissions, decisions, rulings, recommendations, and legal commentary regarding tracking to inform our decisions on how we establish tracker IDs as personal data in our complaints and also prepare for writing our complaint templates. I have collected a comprehensive list of sources to go through. I am using Hypothes.is to organise and annotate them as I go along. Unfortunately, we cannot share these annotations publicly as the source material is copyrighted but I have documented my main conclusions and learnings.

Based on that research, I am also keeping lists of relevant decisions/judgements by DPAs and courts, complaints by others as a reference, and national implementations of Art. 5(3) ePrivacy directive.

Outreach


We gave a (German) talk for Topio’s lecture series “human rights in the digital age”, aimed at a non-technical audience. Topio is a Berlin-based non-profit that offers people free support on privacy topics. While the first half of the talk focused on datarequests.org, the second half was about our work on mobile tracking and what we’re doing with tweasel. Unfortunately, there is no recording but I created an annotated presentation (not merged yet), inspired by Simon Willison.


Fellow privacy NGO noyb filed their first three complaints against illegal tracking in mobile apps with the French CNIL, namely against MyFitnessPal, Fnac and SeLoger. We were happy to contribute some insights from our research, which is also cited in the complaints. We are looking forward to seeing the results of these complaints and will be following them closely to inform our own complaints.

As we have mentioned before: If you’re also working against (mobile) tracking, absolutely do reach out so we can collaborate and share our knowledge!

Libraries

We have noticed that our tools and libraries are currently broken on Windows. We have identified at least two problems so far: `appstraction`’s `postinstall` script is broken and Android emulators don’t work. Lorenz has started work on the former, finding differences in command separators in *nix and Windows shells as the problem.

Tweasel update #4: Request database, tracker documentation and legal research. Licensed under the Creative Commons Attribution 4.0 International License.